Large Language Models (LLMs) are the foundation of modern AI assistants.

But one of the most common misconceptions in the market is this:

“Just pick the best LLM — and everything will work.”

In reality, no single LLM is best at everything.

Different tasks require different strengths: speed, reasoning depth, cost efficiency, multilingual support, or structured output.

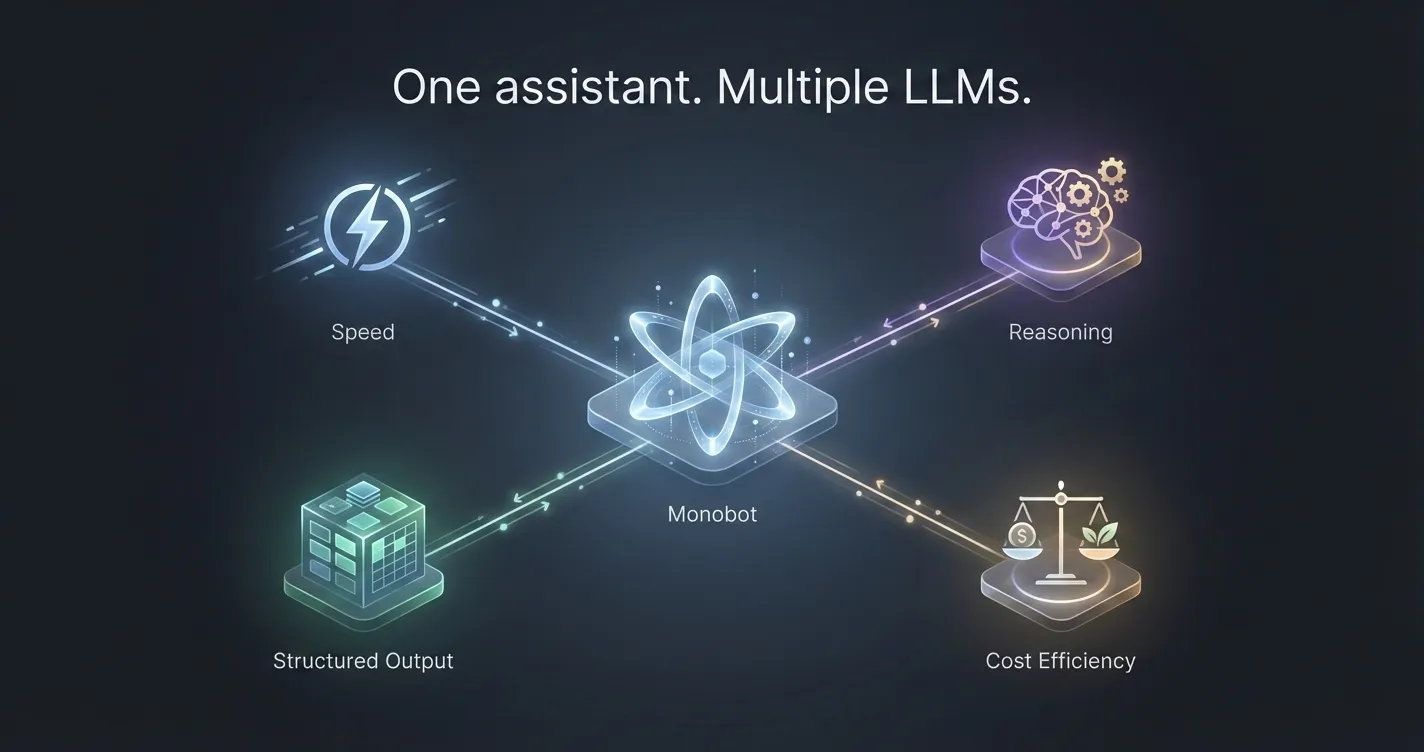

That’s why Monobot is designed to work with multiple LLMs, selecting the right model for each specific job — instead of forcing everything through one.

One Model ≠ One Solution

LLMs vary significantly in how they perform:

- Some are faster but less precise

- Some reason deeply but are slower

- Some are great at conversation, others at structured data

- Some are cost-efficient at scale, others are premium

Using one model for all scenarios often leads to trade-offs:

- higher costs

- slower responses

- lower accuracy in critical flows

In production environments, these trade-offs matter.

How Monobot Uses Multiple LLMs

Monobot is built as a model-agnostic platform, which means:

- We are not locked into a single provider

- Different models can be assigned to different tasks

- Models can be swapped or updated without redesigning the system
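One common way to get this kind of model independence is to make the assistant's logic depend on a small interface rather than on any one vendor's SDK. The Python sketch below shows that idea under stated assumptions. `ChatModel`, `EchoModel`, and `Assistant` are hypothetical names invented for this example, and `EchoModel` only stands in for a real provider client. It is an illustration of the pattern, not Monobot's actual code.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical provider-agnostic interface: any backend with complete() fits."""

    name: str

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend for illustration; a real one would call a vendor SDK."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class Assistant:
    """Business logic depends only on the ChatModel interface, not on a vendor."""

    def __init__(self, model: ChatModel) -> None:
        self.model = model

    def answer(self, question: str) -> str:
        return self.model.complete(question)

    def swap_model(self, model: ChatModel) -> None:
        # Replacing the backend requires no change to answer() or its callers.
        self.model = model


assistant = Assistant(EchoModel("provider-a"))
print(assistant.answer("Hello"))  # [provider-a] Hello
assistant.swap_model(EchoModel("provider-b"))
print(assistant.answer("Hello"))  # [provider-b] Hello
```

Because `Assistant` only calls `complete()`, switching providers is a one-line change for the caller.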

This flexibility allows Monobot to adapt as models evolve — and they evolve fast.

Matching the Model to the Task

Here’s how multiple LLMs are typically used inside Monobot:

1. Conversational Flow & Voice Interactions

Some tasks prioritize:

- low latency

- natural dialogue

- stable conversational tone

For these, Monobot can use models optimized for real-time interaction, especially in voice scenarios where delays break the experience.
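For voice, one way to express "optimized for real-time interaction" is a latency budget: choose the best model that reliably answers within it. This is a minimal sketch, assuming each model has a measured p95 latency and a relative quality score. `ModelProfile`, `pick_for_voice`, the model names, and all numbers are hypothetical placeholders, not Monobot's configuration or real benchmark data.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    # Hypothetical per-model metadata; real values would come from measurement.
    name: str
    p95_latency_ms: int
    quality: int  # relative score, higher is better


def pick_for_voice(models: list[ModelProfile], budget_ms: int) -> ModelProfile:
    """Return the highest-quality model whose p95 latency fits the budget."""
    eligible = [m for m in models if m.p95_latency_ms <= budget_ms]
    if not eligible:
        # Nothing fits: fall back to the fastest model rather than stall the call.
        return min(models, key=lambda m: m.p95_latency_ms)
    return max(eligible, key=lambda m: m.quality)


catalog = [
    ModelProfile("fast-small", 300, 6),
    ModelProfile("balanced", 700, 8),
    ModelProfile("deep-reasoner", 2500, 10),
]
print(pick_for_voice(catalog, budget_ms=800).name)  # balanced
print(pick_for_voice(catalog, budget_ms=100).name)  # fast-small
```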

2. Reasoning-Heavy or Decision-Based Tasks

Other scenarios require:

- multi-step reasoning

- intent disambiguation

- complex logic validation

In these cases, Monobot can route requests to more advanced reasoning models, prioritizing accuracy over speed.
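Escalating to a reasoning model requires some routing signal. The sketch below uses a deliberately simple keyword-and-clause heuristic only to show the shape of such a router. `needs_reasoning`, `route`, the cue list, and the model names are all hypothetical. A production router would more likely use a trained classifier or a small LLM to make this decision.

```python
# Hypothetical cue phrases; a real system might use a classifier model instead.
REASONING_CUES = ("why", "compare", "difference between", "which is better", "calculate")


def needs_reasoning(message: str) -> bool:
    """Toy heuristic: comparisons, explanations, or many clauses suggest multi-step reasoning."""
    text = message.lower()
    clause_markers = text.count(",") + text.count(" and ") + text.count(" but ")
    return clause_markers >= 2 or any(cue in text for cue in REASONING_CUES)


def route(message: str) -> str:
    # Placeholder model names, not real products.
    return "deep-reasoner" if needs_reasoning(message) else "low-latency-chat"


print(route("What are your opening hours?"))  # low-latency-chat
print(route("Which is better for me, the annual plan or monthly?"))  # deep-reasoner
```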

3. Structured Outputs & Business Actions

Some flows require the assistant to:

- extract structured data

- validate inputs

- trigger workflows

- call APIs

In these flows, the priority is consistency and reliability, not creativity.

Monobot assigns them to models that perform best with:

- schema-based outputs

- deterministic responses

- strict formatting
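A common pattern for reliable structured output is to parse the model's reply, validate it against an expected schema, and retry rather than pass malformed data to a workflow or API. This is a minimal sketch using only Python's standard `json` module. `BOOKING_SCHEMA`, `parse_and_validate`, and `extract` are hypothetical names, the type check is a simplified stand-in for a full JSON Schema validator, and `call_model` is assumed to be any function that sends a prompt to a model and returns its text.

```python
import json
from typing import Callable, Optional

# Hypothetical schema: required field -> exact expected Python type.
BOOKING_SCHEMA = {"name": str, "date": str, "guests": int}


def parse_and_validate(raw: str, schema: dict[str, type]) -> Optional[dict]:
    """Return the parsed object if it is valid JSON matching the schema, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    for field, expected in schema.items():
        # Exact type match, so a missing field (None) or a bool for an int is rejected.
        if type(data.get(field)) is not expected:
            return None
    return data


def extract(call_model: Callable[[str], str], prompt: str, retries: int = 2) -> dict:
    """Ask the model for JSON; retry on invalid output instead of passing it downstream."""
    for _ in range(retries + 1):
        result = parse_and_validate(call_model(prompt), BOOKING_SCHEMA)
        if result is not None:
            return result
    raise ValueError("model did not return valid structured output")


# Simulated model replies: the first is chatty prose, the second is valid JSON.
replies = iter(["Sure! Here is the booking.", '{"name": "Ana", "date": "2025-03-01", "guests": 2}'])
booking = extract(lambda _: next(replies), "Extract the booking details as JSON")
print(booking)
```

The first simulated reply is rejected because it is not JSON, so `extract` retries and returns the second, valid one.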

4. Cost-Optimized High-Volume Requests

Not every interaction requires a top-tier model.

For:

- repetitive questions

- simple confirmations

- status updates

Monobot can use lighter, more cost-efficient models, which can significantly reduce operational costs at scale.
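The effect of sending simple requests to a lighter model can be estimated with back-of-the-envelope arithmetic. The sketch below shows that calculation. The prices, intents, traffic mix, and model names are invented placeholders, not real vendor rates or Monobot data, so only the method carries over.

```python
from collections import Counter
from typing import Callable

# Hypothetical prices in USD per 1,000 requests; placeholders, not real vendor rates.
PRICE_PER_1K = {"budget-small": 0.5, "general-large": 10.0}

# Intents assumed simple enough for a lighter model (illustrative list).
SIMPLE_INTENTS = {"order_status", "confirmation", "opening_hours"}


def pick_model(intent: str) -> str:
    return "budget-small" if intent in SIMPLE_INTENTS else "general-large"


def estimated_cost(intents: list[str], router: Callable[[str], str]) -> float:
    """Total cost if each request goes to the model the router picks."""
    per_model = Counter(router(intent) for intent in intents)
    return sum(PRICE_PER_1K[model] * n / 1000 for model, n in per_model.items())


traffic = ["order_status"] * 800 + ["refund_dispute"] * 200
routed = estimated_cost(traffic, pick_model)
single = estimated_cost(traffic, lambda _: "general-large")
print(f"routed: ${routed:.2f}, single model: ${single:.2f}")  # routed: $2.40, single model: $10.00
```

With these made-up numbers, routing 80% of traffic to the cheaper model lowers the estimate from $10.00 to $2.40. Real savings depend entirely on actual prices and on how much traffic is genuinely simple.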

Why This Matters in Production

Using multiple LLMs is not just about flexibility for developers; it’s about stability, performance, and cost control for businesses.

With a multi-model approach, Monobot can:

- reduce latency where speed matters

- improve accuracy where mistakes are expensive

- scale without exploding costs

- avoid dependency on a single vendor

- adapt quickly as better models appear
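Avoiding dependency on a single vendor usually implies some form of fallback when a provider fails or times out. This is a minimal sketch, assuming each provider is wrapped in a function that raises a shared `ProviderError` on failure. `ProviderError`, `complete_with_fallback`, `flaky`, `healthy`, and the vendor names are hypothetical, and real clients would also need timeouts and retry policies.

```python
from typing import Callable


class ProviderError(Exception):
    """Raised by a hypothetical provider wrapper when a call fails."""


def complete_with_fallback(
    providers: list[tuple[str, Callable[[str], str]]], prompt: str
) -> tuple[str, str]:
    """Try providers in order; return (provider name, reply) from the first that succeeds."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


def flaky(prompt: str) -> str:
    # Simulates a vendor outage.
    raise ProviderError("timeout")


def healthy(prompt: str) -> str:
    return f"answer to {prompt!r}"


print(complete_with_fallback([("vendor-a", flaky), ("vendor-b", healthy)], "hi"))
# ('vendor-b', "answer to 'hi'")
```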

This is especially critical for voice assistants, customer support, and automation-heavy workflows.

Future-Proof by Design

The LLM landscape changes monthly.

New models appear.

Existing ones improve or decline.

Pricing shifts.

Capabilities evolve.

Monobot is designed so that the assistant stays stable even when models change.

Businesses don’t need to rebuild their logic every time the AI ecosystem moves forward — Monobot absorbs that complexity.

Final Thoughts

The future of AI assistants is not about choosing the best LLM.

It’s about building systems that can:

- use the right model for the right task

- evolve without breaking

- stay efficient, accurate, and reliable in production

That’s why Monobot uses multiple LLMs — and why this approach matters far more than most people realize.